machinesMemory

Keywords:

Data aesthetics, multiScreenInstallation, System, intelligentAgent, dataVisualisation, imageProcess, Algorithms, Machine Learning, TensorFlow, Raspberry Pi

Date:

Sept. 2020

Dimensions:

Variable

‘machinesMemory’ is a multi-screen art installation that presents the idea of a machine’s memory. Neural network algorithms bring with them new possibilities for artistic practices. Artists consider them to be independent entities that generate artworks beyond the realm of human control. The quote above presents the idea of the interconnectivity between objects created by humans and nature, and it led me to consider the connections between nature and humans, and between nature and artificial intelligence-based machines. Building on this inspiration, this experiment is a preliminary exploration of the aesthetic values of machine learning algorithm constructions, which include, but are not limited to, the ontology of AI-based machines and the beautiful forms or patterns taken by machine-generated data. I want to emphasise that this project does not focus on the methodology of constructing a machine learning algorithm, such as a natural language processing model that generates a poem. Instead, I focus on using machine-generated data as material from which to create something new.

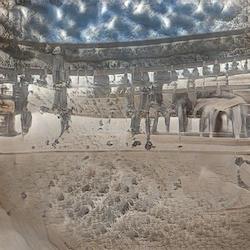

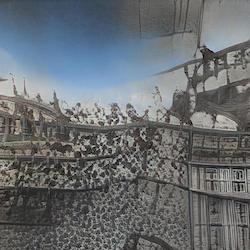

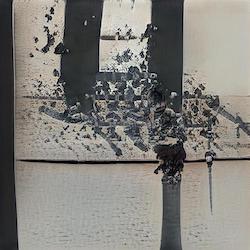

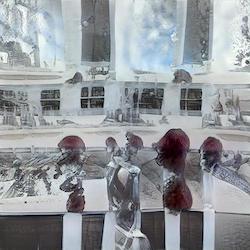

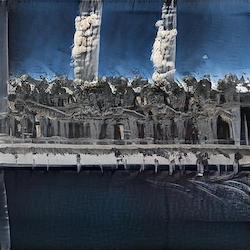

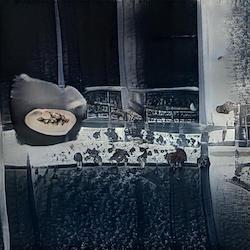

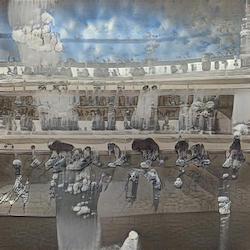

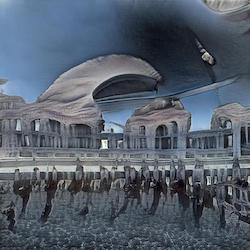

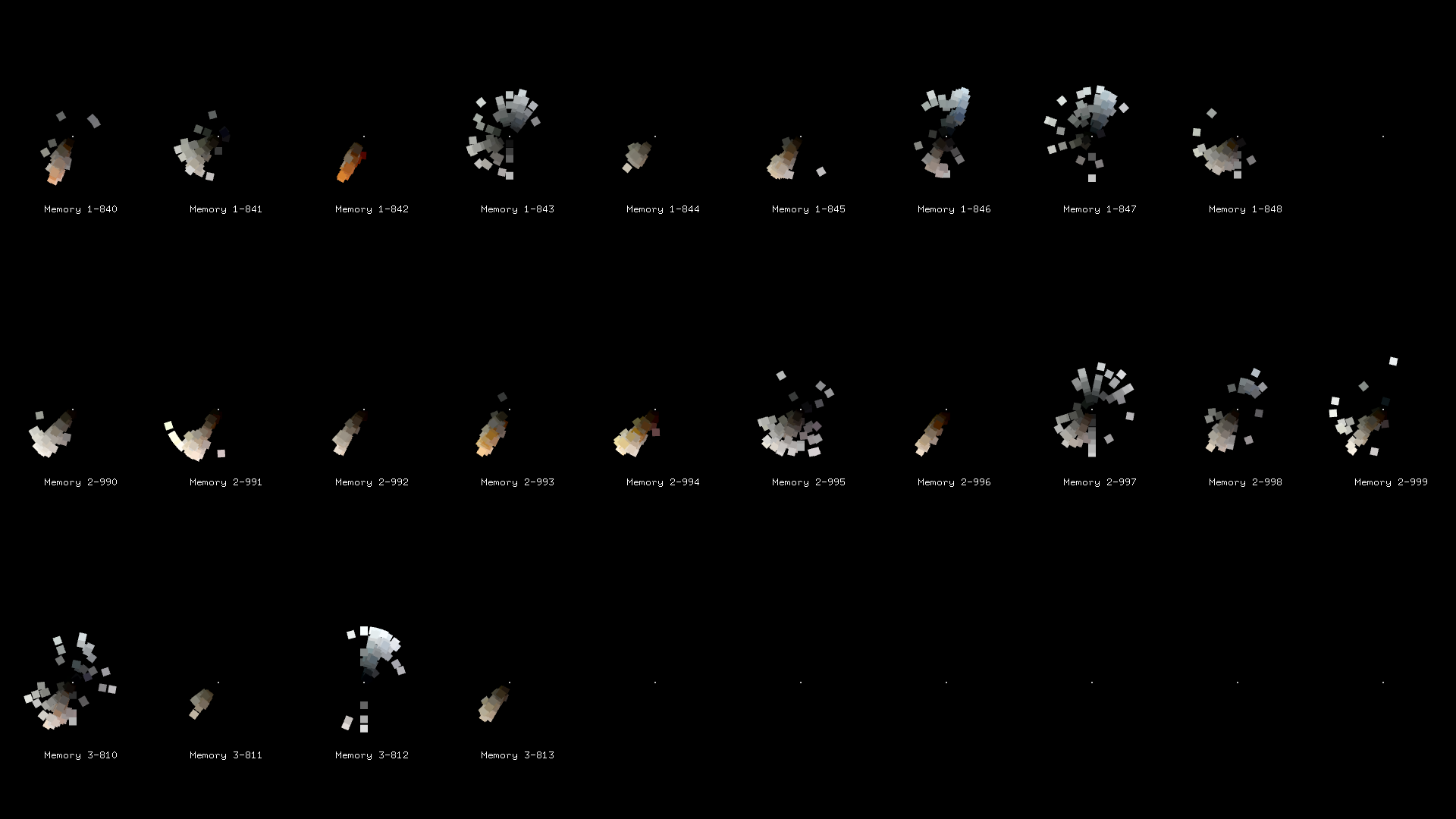

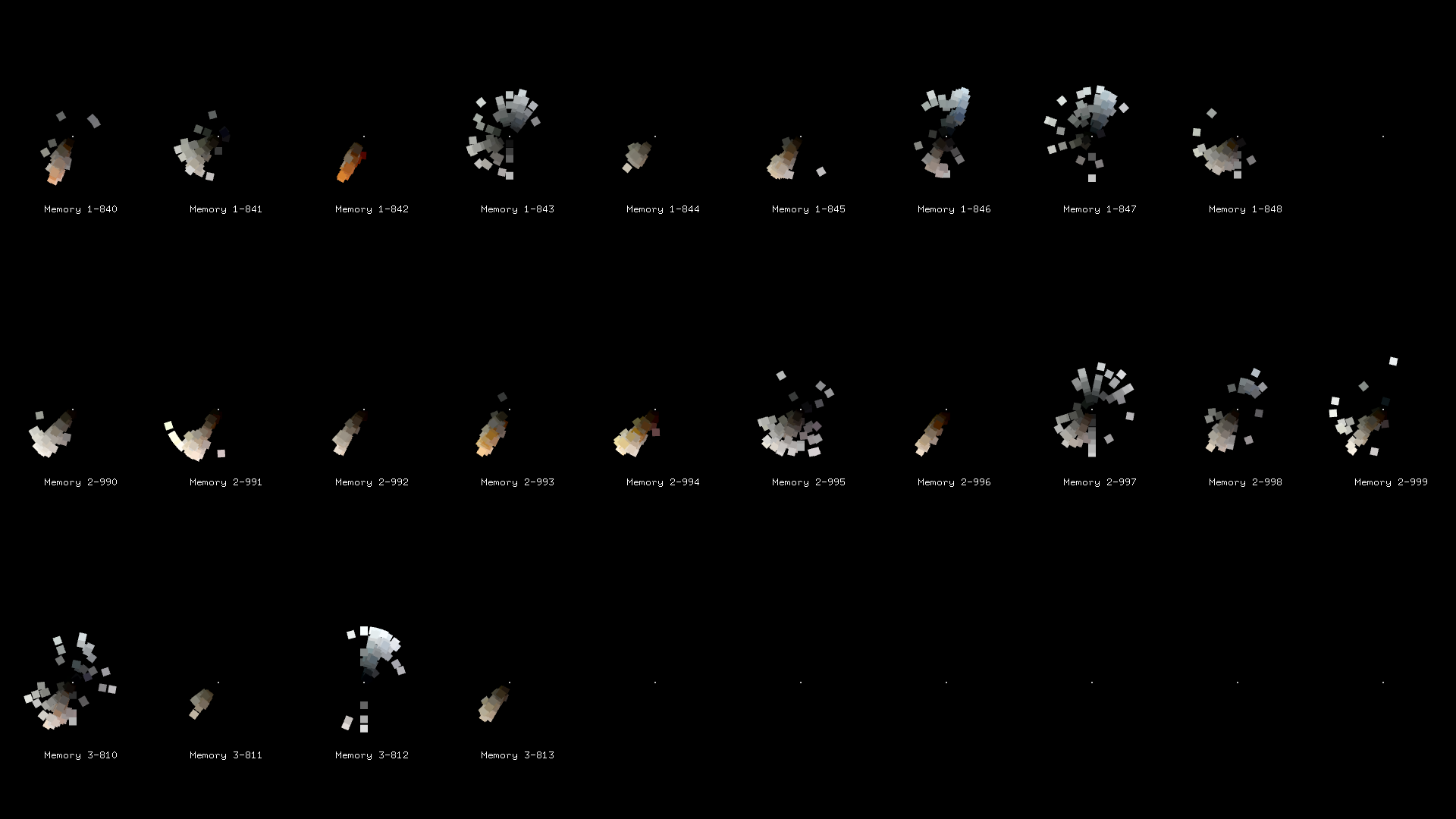

To emphasise the independence of machines, I collected 1,000 photos from the photo albums on my friends’ mobile phones that represent the idea of memory in an abstract way. I used this dataset to train a StyleGAN deep learning model. After nine hours and 1,000 training steps, the results were abstract. Features from the original training set can be found in some machine-generated images, but other parts of these generated images are rather abstract. Most of the images in the training set were landscape photos, so some generated images are recognisable (e.g. as Italian landscapes). Machines certainly cannot possess such memories at a conscious level. However, from the perspective of ontology, we can imagine that these ‘memories’ do exist in some way.

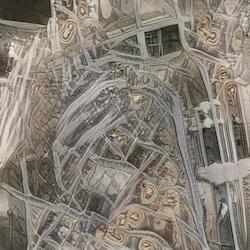

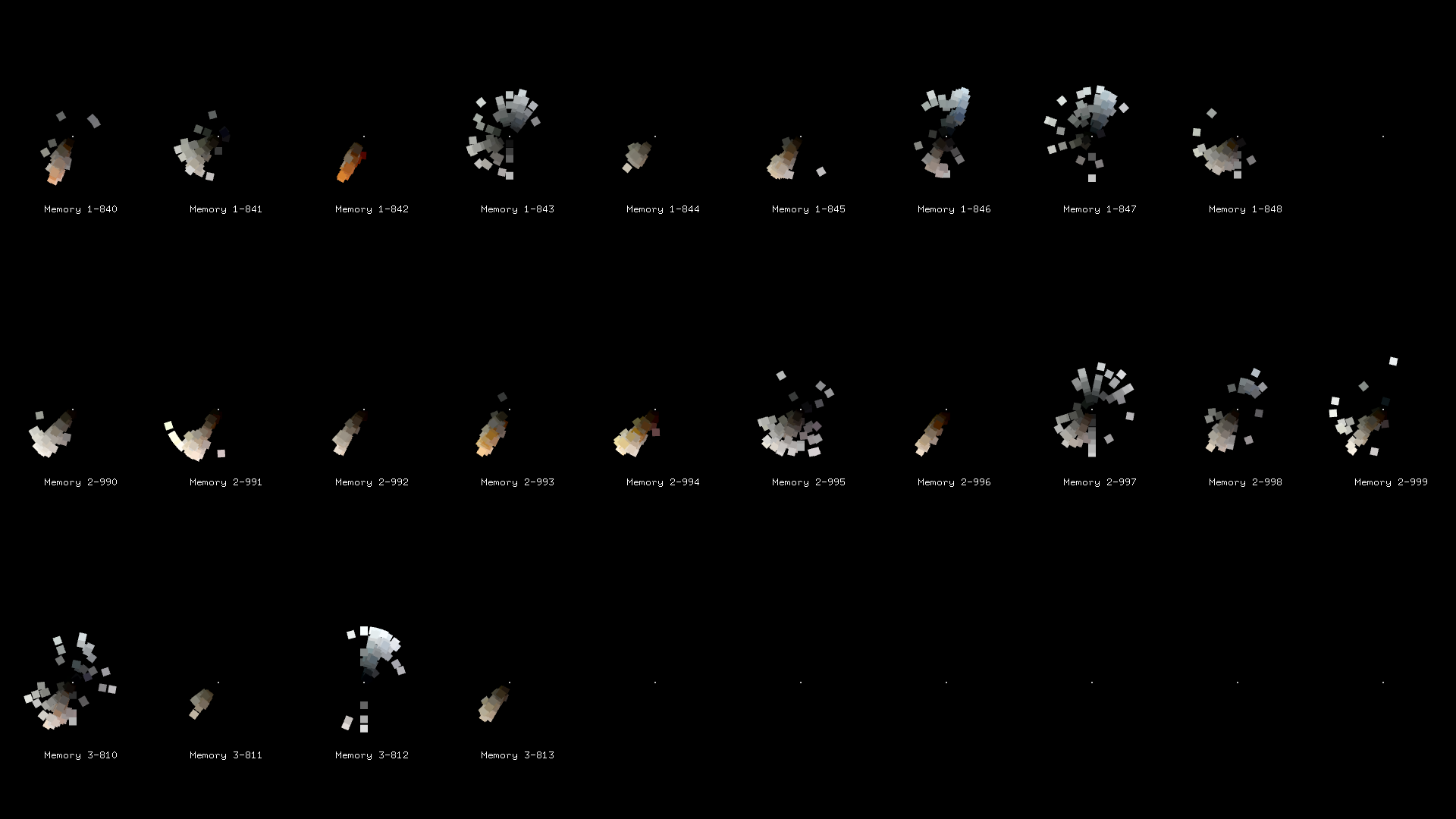

I split the feature points extracted from the machine-generated images into different groups and assigned each point an index under every ‘sample’. I refer to this as ‘a machine’s way’ because humans are unable to extract these feature points actively; they were extracted by the machines themselves, but we know what those data represent.
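The grouping and indexing step described above can be illustrated with a minimal sketch. It is not the code used in the installation: the detector (local peaks of gradient magnitude), the grid-cell grouping rule, and every name in it (`detect_feature_points`, `group_points`, `index_sample`, `threshold`, `cell`) are assumptions chosen only to show the idea of feature points being split into groups and indexed under a ‘sample’.

```python
from collections import defaultdict


def detect_feature_points(image, threshold=50):
    """Return (row, col, strength) for pixels whose gradient magnitude
    is a local peak. Assumed stand-in for the real feature extractor."""
    h, w = len(image), len(image[0])
    strength = [[0.0] * w for _ in range(h)]
    # Central-difference gradient on interior pixels.
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = image[r][c + 1] - image[r][c - 1]
            gy = image[r + 1][c] - image[r - 1][c]
            strength[r][c] = (gx * gx + gy * gy) ** 0.5
    points = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            s = strength[r][c]
            if s < threshold:
                continue
            neighbours = [
                strength[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            ]
            # Keep the pixel if no neighbour is stronger (ties are kept).
            if s >= max(neighbours):
                points.append((r, c, s))
    return points


def group_points(points, cell=4):
    """Split points into groups by the grid cell they fall in (assumed rule)."""
    groups = defaultdict(list)
    for r, c, s in points:
        groups[(r // cell, c // cell)].append((r, c, s))
    return dict(groups)


def index_sample(sample_name, image, threshold=50, cell=4):
    """Assign each point a group id and an index within its group."""
    groups = group_points(detect_feature_points(image, threshold), cell)
    records = []
    for group_id, key in enumerate(sorted(groups)):
        for point_id, (r, c, s) in enumerate(groups[key]):
            records.append({
                "sample": sample_name,
                "group": group_id,
                "index": point_id,
                "row": r,
                "col": c,
                "strength": s,
            })
    return records


# Usage with a synthetic greyscale image (a nested list of intensities).
demo = [[0] * 8 for _ in range(8)]
demo[3][3] = 255
for record in index_sample("sample_0", demo):
    print(record)
```

Each record ties a point back to its sample, its group, and its position within the group, which is the kind of structure a later visualisation could draw from.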

What I want to emphasise in this project is the independence of the machine. I treat the machine-generated images, which belong to the machines themselves, as materials, and I am trying to find a way to understand what these images are. In the data visualisation projects we are used to seeing, we know what every data point in the dataset means. For example, if we collect data from our body movements, we know which data represent the left hand and which represent the right hand. We can then use these meaningful data to make beautiful patterns based on a set of rules. However, theorists describe machine-generated data as a ‘black box’. What I am doing is getting inside the black box in a machine’s way, through image processing algorithms, and then understanding these ‘meaningful’ data.